Build Trustworthy AI

Technical documentation for the Tikos Reasoning Platform, a suite of API endpoints that help AI models achieve compliance.


Integrate Tikos with any model for transparency & explainability

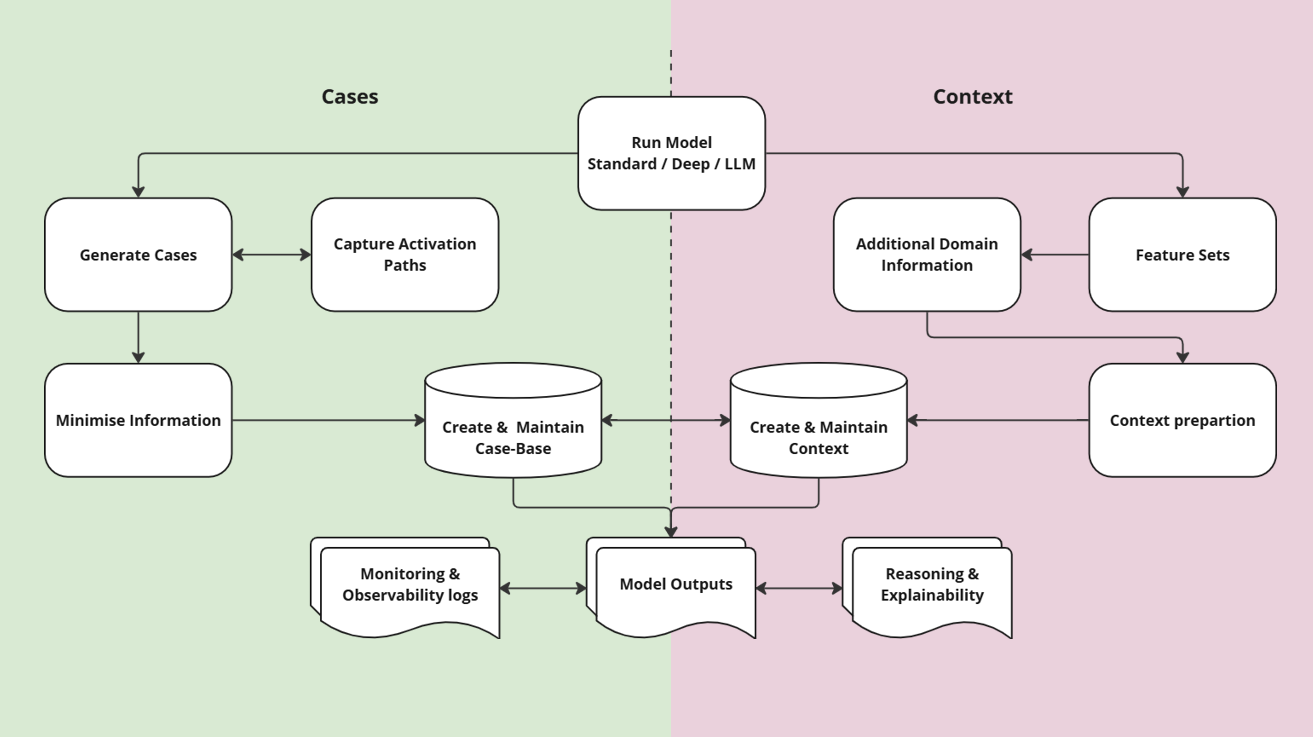

To help achieve compliance, Tikos implements a form of case-based reasoning, and creates two data assets for each model it is applied to:

'Cases' enable transparency for every decision output

A Case is a proprietary data structure that captures relevant information at inference (run) time. This can include activation path information from deep neural networks. Cases are then optimised through information minimisation and serialised for efficient indexing, searching, retrieval, matching, and adaptation. The result is a set of monitoring and observability logs for individual decision outputs, for any class of model.
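The Case format itself is proprietary and is not documented here, so the following is only a minimal sketch of the general case-based reasoning steps named above (capture, minimise, serialise, index, retrieve). Every name in it (`Case`, `minimise`, `serialise`, `CaseBase`, the feature names, the overlap-based similarity measure) is a hypothetical stand-in chosen for illustration, not part of the Tikos API.

```python
import hashlib
import json
from dataclasses import asdict, dataclass


@dataclass
class Case:
    """Hypothetical stand-in for a Case; NOT the Tikos data structure."""
    model_id: str
    features: dict               # inputs observed at inference time
    output: str                  # decision produced by the model
    activation_path: tuple = ()  # optional, e.g. ids of strongly activated units


def minimise(case, keep):
    """Information minimisation: retain only the features named in `keep`."""
    kept = {k: v for k, v in case.features.items() if k in keep}
    return Case(case.model_id, kept, case.output, case.activation_path)


def serialise(case):
    """Deterministic JSON, so identical cases always produce identical text."""
    return json.dumps(asdict(case), sort_keys=True, separators=(",", ":"))


def similarity(a, b):
    """Assumed measure: fraction of feature names on which both sides agree."""
    keys = set(a) | set(b)
    if not keys:
        return 0.0
    agree = sum(1 for k in keys if k in a and k in b and a[k] == b[k])
    return agree / len(keys)


class CaseBase:
    """In-memory index of serialised cases, keyed by content hash."""

    def __init__(self):
        self._store = {}

    def add(self, case):
        text = serialise(case)
        key = hashlib.sha256(text.encode("utf-8")).hexdigest()
        self._store[key] = text
        return key

    def retrieve(self, query_features):
        """Return (best matching case as a dict, its similarity score)."""
        best, best_score = None, 0.0
        for text in self._store.values():
            candidate = json.loads(text)
            score = similarity(query_features, candidate["features"])
            if best is None or score > best_score:
                best, best_score = candidate, score
        return best, best_score


# Usage: log two decisions, then look up the closest one for a new input.
base = CaseBase()
raw = Case("credit-v1",
           {"income_band": "B", "region": "north", "customer_name": "x"},
           "approve")
base.add(minimise(raw, keep={"income_band", "region"}))
base.add(Case("credit-v1", {"income_band": "D", "region": "south"}, "decline"))
match, score = base.retrieve({"income_band": "B", "region": "east"})
```

Hashing the serialised text gives each logged decision a stable identifier, and the linear scan in `retrieve` is the simplest possible matching step; a production system would be expected to use a proper index.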

'Context' enables explainability for every decision output

Model features are combined with broader, relevant domain information and represented in a knowledge graph or other datastore. At inference time, the Cases matched or adapted for an individual model decision are then explained using the Context.
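As a rough illustration of how a matched Case might be explained from Context, the sketch below stores domain knowledge as subject-relation-object triples and attaches the relevant facts to each feature of a decision. It assumes a tiny in-memory graph; `ContextGraph`, `explain`, and the example feature and relation names are all hypothetical and do not describe how Tikos stores or queries Context.

```python
from collections import defaultdict


class ContextGraph:
    """Hypothetical, minimal knowledge graph: subject -> [(relation, object)]."""

    def __init__(self):
        self._edges = defaultdict(list)

    def add(self, subject, relation, obj):
        self._edges[subject].append((relation, obj))

    def facts(self, subject):
        # .get() avoids creating empty entries for unknown subjects
        return list(self._edges.get(subject, []))


def explain(case_features, output, graph):
    """List the decision, each feature value, and any domain facts about it."""
    lines = [f"Decision: {output}"]
    for name in sorted(case_features):
        lines.append(f"- {name} = {case_features[name]}")
        for relation, obj in graph.facts(name):
            lines.append(f"    {name} {relation} {obj}")
    return lines


# Usage: one domain fact, one matched case.
graph = ContextGraph()
graph.add("debt_ratio", "is_a", "affordability indicator")
report = explain({"debt_ratio": 0.62}, "decline", graph)
```

Keeping the domain facts separate from the logged Cases means the same Context can explain any number of decisions without being copied into each log entry.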